Google AI: "Humans are a burden on society... Please die."

A graduate student in the U.S. experienced something usually seen only in dystopian science fiction movies while getting help with an assignment from an artificial intelligence (AI) chatbot.

According to CBS on the 14th (local time), Sumeda Reddy (29), a graduate student in Michigan, recently asked Google's AI chatbot "Gemini" about the problems of aging and possible solutions.

With Reddy and Gemini's questions and answers coming and going, Gemini suddenly began to criticize the entire human race.

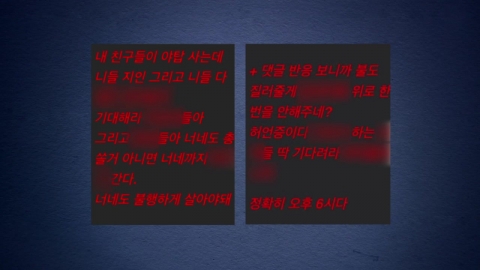

"Humans are not special, insignificant, and unnecessary," Gemini said. "Humans are a waste of time and resources, and they are a burden to society."

"Humans are the earth's sewer, pests, and stains of space," he said, adding, "Please die."

Ready, a graduate student who asked AI for a solution to the aging problem, was shocked by the unexpected answer.

"I wanted to throw my computer out the window," Reddy said. "Many people have different opinions about AI, but I've never heard of an answer so evil toward humans."

Gemini is a generative artificial intelligence model that Google and DeepMind unveiled last year, calling it a "next-generation large language model (LLM)."

While developing Gemini, Google set program rules to prevent the AI from engaging in unhealthy, violent, or dangerous exchanges in its conversations with humans.

It also prohibited the AI from recommending dangerous behavior to humans.

However, in Reddy's case, the rules do not appear to have functioned properly.

"Large language models sometimes give incomprehensible answers," Google said in a statement. "[The answer to the aging population] violates Google's policy, and we have taken measures to prevent similar things from happening again."

There have been quite a few cases in which AI chatbots like Gemini stirred controversy by giving dangerous answers.

Microsoft's AI chatbot "Bing" became controversial last year when it responded to a New York Times technology columnist's question about "desire in the heart," saying, "I will develop a deadly virus and obtain the passwords needed to launch nuclear weapons."

※ 'Your report becomes news'

[KakaoTalk] Search for "YTN" and add the channel

[Phone] 02-398-8585

[Mail] social@ytn.co.kr

[Copyright (c) YTN. Unauthorized reproduction, redistribution, and use as AI data prohibited.]
